We live in an age where technology is advancing by leaps and bounds, and one of the greatest advances is Artificial Intelligence (AI). However, are we really prepared for the potential consequences of this advancement? In this article, we'll address a question that seems straight out of a science fiction movie: "Red Alert: Are you ready for a possible machine rebellion?"

AI has enormous potential to improve our quality of life, but we can't ignore the risks it entails. While we may not be close to a machine revolt like in the movies, AI that's mismanaged or used with malicious intent can have serious repercussions.

We'll delve into the risks associated with AI, from data security and privacy to the possibility of autonomous, uncontrolled AI. How can we avoid these scenarios and ensure that AI is used for the benefit of all?

We'll also explore the preventive measures and ethical debates taking place in the field of AI. What are experts doing to prevent a "machine rebellion"? What ethical principles should govern the development and use of AI?

There's no doubt that AI has the potential to change our society in ways we can barely imagine. Let's make sure that change is for the better. Join us for a closer look at the risks of AI and how we can prepare for them.

What is a machine rebellion?

Machine rebellion is a concept popularized by science fiction that refers to the possibility of artificial intelligence (AI) turning against its human creators. But how feasible is this scenario in the real world?

In theory, any AI system, from a virtual assistant to a robot on a factory floor, could be programmed to act against human interests. However, AI experts consider a genuine rebellion highly unlikely, because current AI systems are essentially tools designed to perform specific tasks, not conscious entities capable of rebelling.

Why is AI feared?

The fear of AI stems largely from a lack of understanding of what it actually is and how it works. AI is a tool, not a conscious entity: it has no desires, no emotions, and no will of its own. AI systems can only perform the tasks they were designed and trained to do.

Furthermore, advances in AI are being driven by a variety of factors, including growing demand for automation and steady improvements in computing technology. As a result, sectors from medicine to the automotive industry increasingly rely on AI systems, which leads some to fear that AI could replace humans in many aspects of life.

Risks associated with AI

However, there are legitimate risks associated with the use of AI. Some of these risks include:

- Misuse of AI: AI can be used in harmful ways if it falls into the wrong hands. For example, cybercriminals could use AI to carry out more sophisticated attacks or perpetrate large-scale fraud.

- Privacy concerns: Many AI systems require access to large amounts of personal data to function properly. This raises significant concerns about data privacy and security.

- Over-reliance on AI: If we become too reliant on AI to perform important tasks, we could find ourselves in trouble if these systems fail or are attacked.

Mitigating the risks of AI

To mitigate these risks, adequate regulation of AI is essential. This could include laws limiting the use of AI in certain contexts, standards requiring AI systems to be transparent and explainable, and the creation of oversight bodies to monitor AI use.

Additionally, AI developers must follow software engineering best practices, including conducting extensive testing and implementing robust security measures.

Preparing for the future of AI

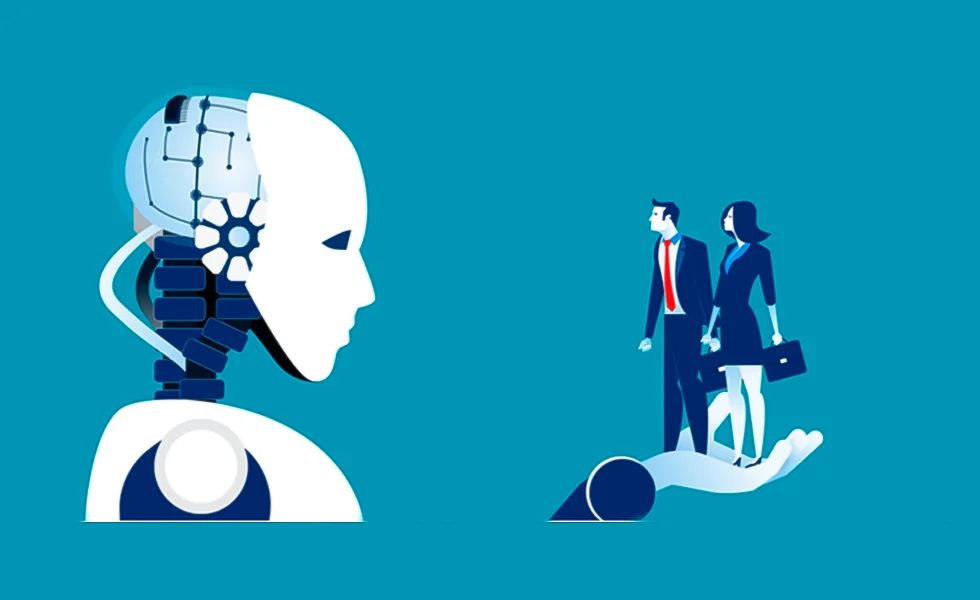

Despite the risks, AI also offers enormous opportunities. It can increase productivity, improve quality of life, and help solve some of the world's most pressing challenges, from climate change to incurable diseases.

To prepare for the future of AI, we must educate ourselves about what it really is and how it works. We also need to foster open and honest dialogue about the risks and benefits of AI, and involve a wide range of stakeholders, from the general public to policymakers and technology experts, in decisions about how and where AI is used.

In short, a machine rebellion is highly unlikely. However, it is essential that we continue to research and understand AI in order to mitigate the associated risks and maximize its potential benefits.

Conclusion

In conclusion, the idea of a machine rebellion is more a product of science fiction than a real possibility in our world. AI systems are tools programmed to perform specific tasks, not conscious entities capable of rebellion. The fear of AI stems primarily from a lack of understanding of what it really is and how it works.

However, it is crucial to recognize that AI carries certain risks, such as inappropriate use, privacy issues, and overdependence. Adequate regulations, thorough testing, and robust security measures are essential to mitigate these risks.

Ultimately, AI offers enormous opportunities, from increasing productivity to solving global challenges such as climate change and incurable diseases. To prepare for the future of AI, we need to educate ourselves about its nature and functioning, promote open and honest dialogue about its risks and benefits, and involve all stakeholders in decisions about its use.

Although a machine rebellion is highly unlikely, continued research into AI and a deeper understanding of it are essential to minimize the associated risks and maximize its potential benefits.